1 – Introduction

Having Power BI data models that don’t follow best practice in shared resources is penalizing the adoption by end users: Slow performance during the refresh or the analysis, usability, manageability, … But having data models on dedicated capacities not following best practices also implies unnecessary spending of money 💰 !

Having Power BI data models that don’t follow best practice in shared resources is penalizing the adoption by end users: Slow performance during the refresh or the analysis, usability, manageability, … But having data models on dedicated capacities not following best practices also implies unnecessary spending of money 💰 !

The presented solution in this article can be part of a global governance topics within the enterprise where data product manager/owner (Data Mesh) want to have an idea of the overall view of their Power BI data models quality and infuse standards of development following best practices.

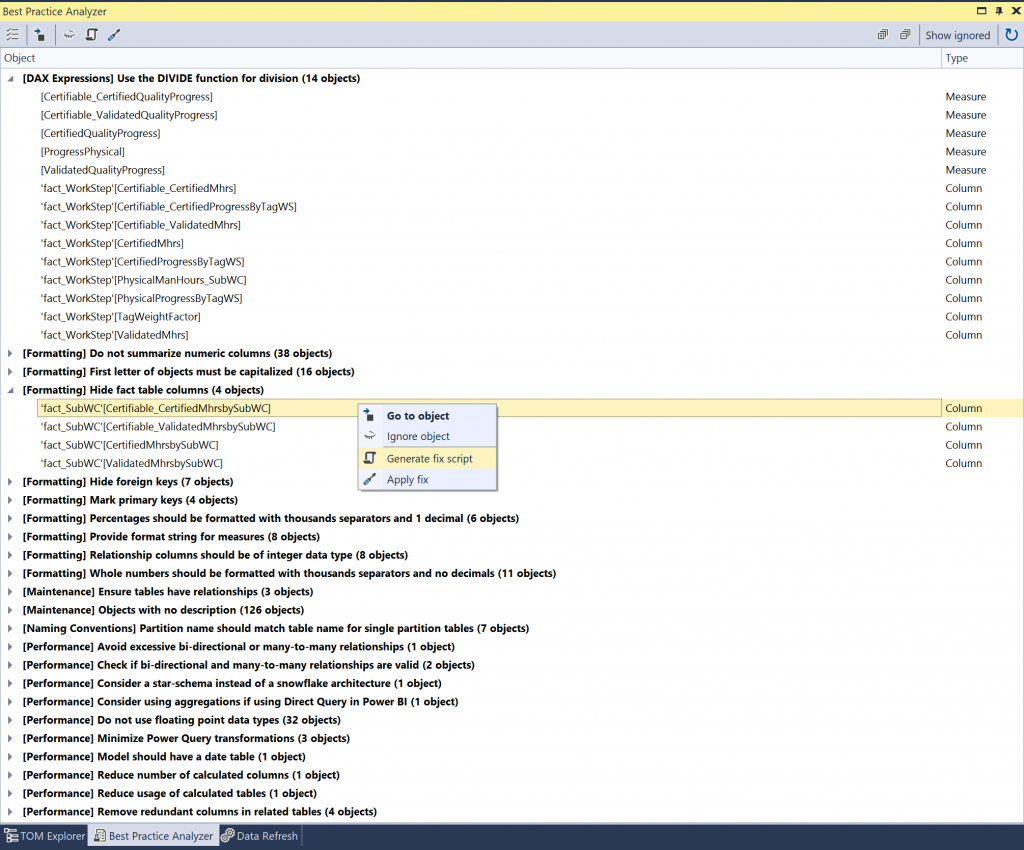

There is no need to remind that optimal model design is crucial to delivering an efficient and scalable solution. Coming to the rescue of quickly assessing those data models, the Best Practice Analyzer within Tabular Editor which is an open-source community tool developed by Daniel Otykier will check standard rules. Michael Kovalsky from the PBI CAT Team as added a lot of rules from learning of analyzing dozens of tabular models created by developers from across the world. Here an example of a very bad designed data model:

Documentation: Best Practice Analyzer

Rule’s repository: An official collection of standard Rules for use with Tabular Editor’s Best Practice Analyzer

💡 A company can define their own rules like having a naming convention well defined for all their data model objects, metadata definition, ….

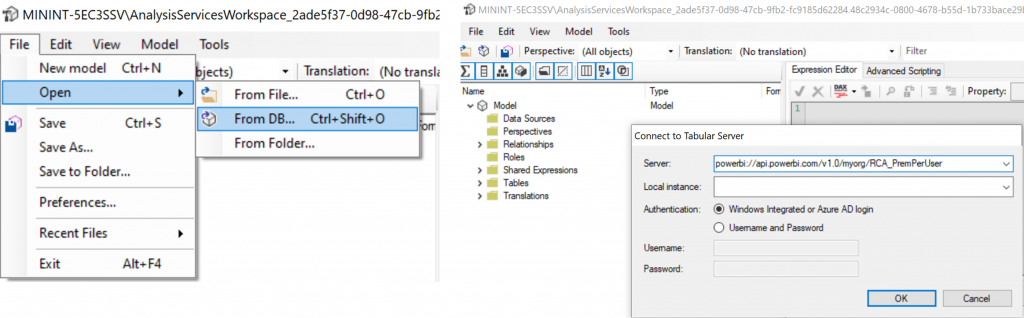

This analysis can be done on a single data model manually by connecting with Tabular Editor to the Power BI model from an open Power BI Desktop file or from an XMLA endpoint:

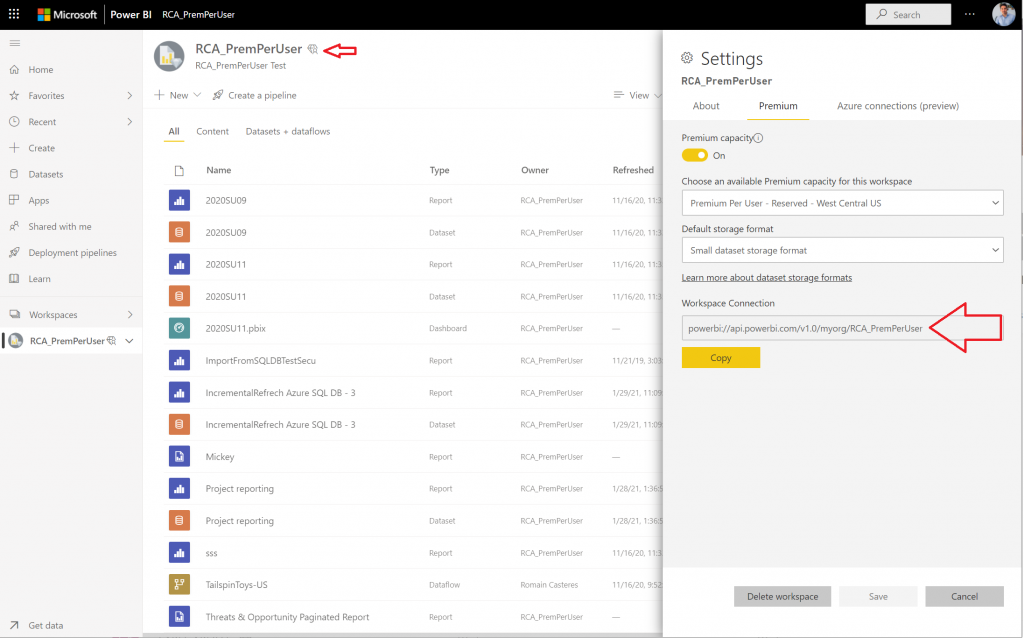

XMLA endpoints enable open-platform connectivity to Power BI datasets. The workspace connection can be found in the settings:

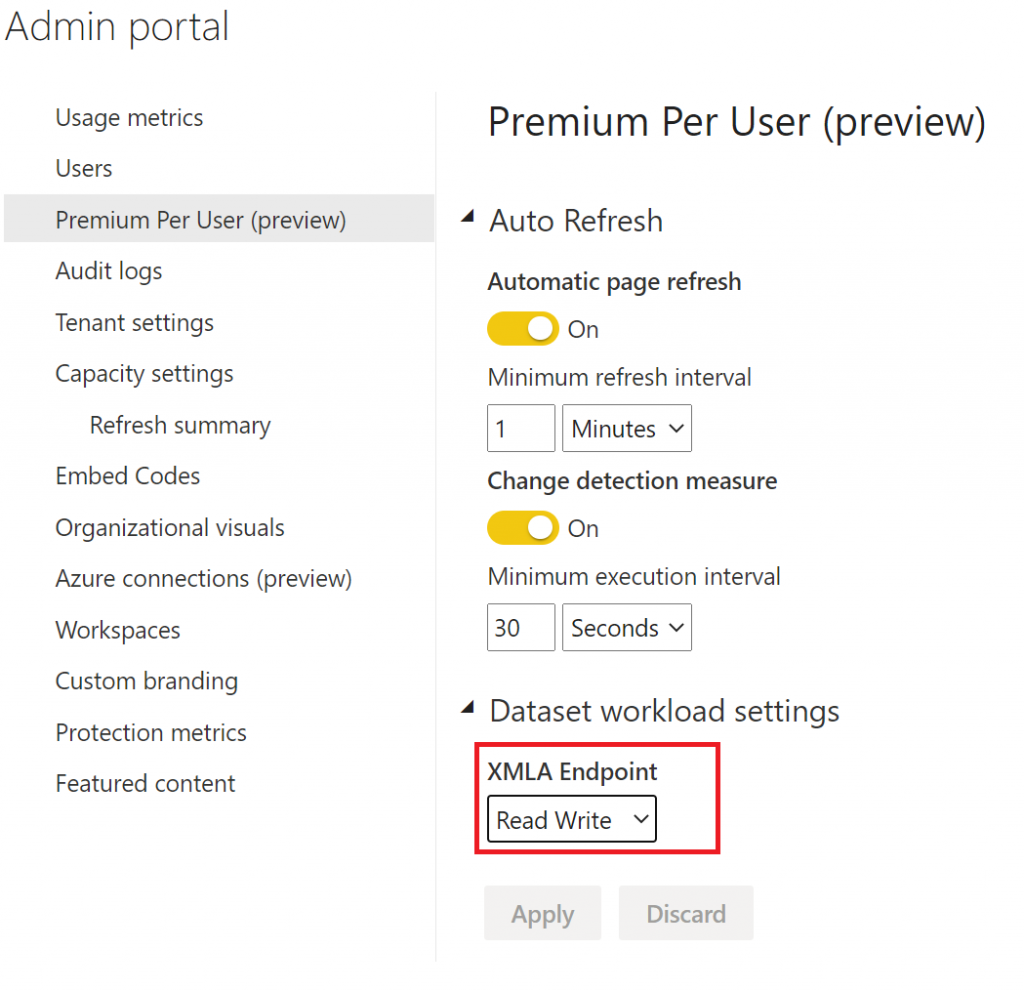

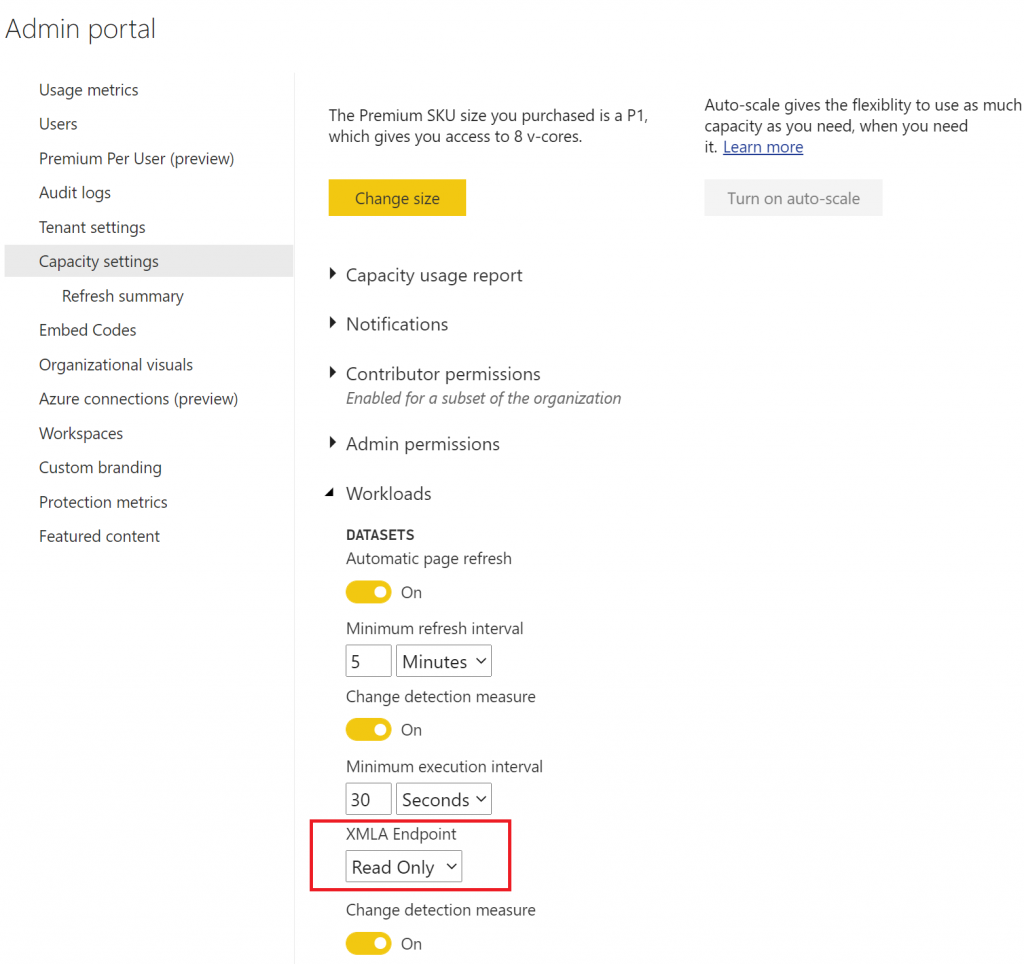

Prerequisite for XMLA Endpoint connection:

- For Premium Per User:

- For Premium Capacity:

Documentation: Dataset connectivity and management with the XMLA endpoint in Power BI

Prefer the use of Tabular Editor to edit the data model with XMLA endpoint than using Power BI Desktop and reuploading it which will be more time consuming.

While analyzing one by one Power BI data model can be done, why not analyzing multiple ones from the same workspace! In one of his article Dave Ruijter (Author at Modern Data & AI) present a solution to Check The Quality Of All Power BI Data Models At Once With Best Practice Analyzer Automation (BPAA), my article and solution is directly inspired from his solution.

2 – Running BPA for all datasets from one Workspace 💎

Why not analyzing best practice for every data model within one Workspace on Premium capacity? With this kind of solution, it could be easy to improve little by little datasets consuming too many dedicated resources and so increasing overall user adoption. Increasing the quality of the data model will give room for dataset increase, other workload type usage and could also lead to scaling down premium resources!

Why not analyzing best practice for every data model within one Workspace on Premium capacity? With this kind of solution, it could be easy to improve little by little datasets consuming too many dedicated resources and so increasing overall user adoption. Increasing the quality of the data model will give room for dataset increase, other workload type usage and could also lead to scaling down premium resources!

When Premium capacity performance issues arise, a common first approach is to optimize or tune your solutions to restore acceptable response times. The rationale being to avoid purchasing additional Premium capacity unless justified.

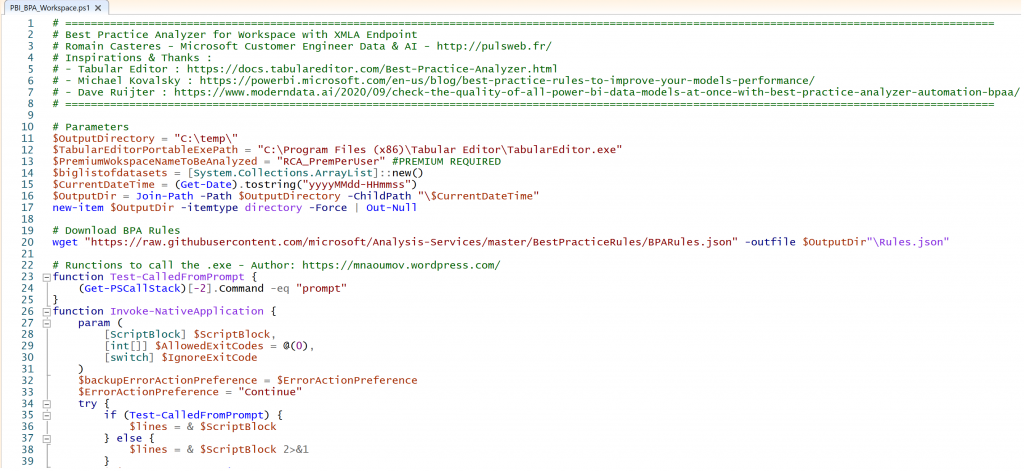

The following PowerShell script will achieve the following tasks after having entered output folder, path to the Tabular Editor executable and the name of the Premium Workspace to be analyzed:

- Download BPA set of rules

- Connect to a Power BI specific Premium Workspace

- Run the Best Practice Analyzer on top of each data model from the Workspace

- Export results of the assessment and metadata data around the dataset and the workspace

Source: PBI_BPA_Workspace.ps1

Outputted files:

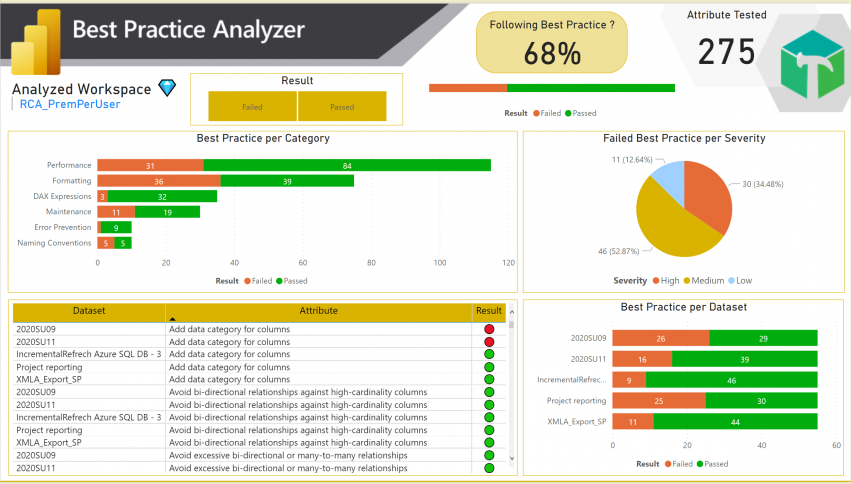

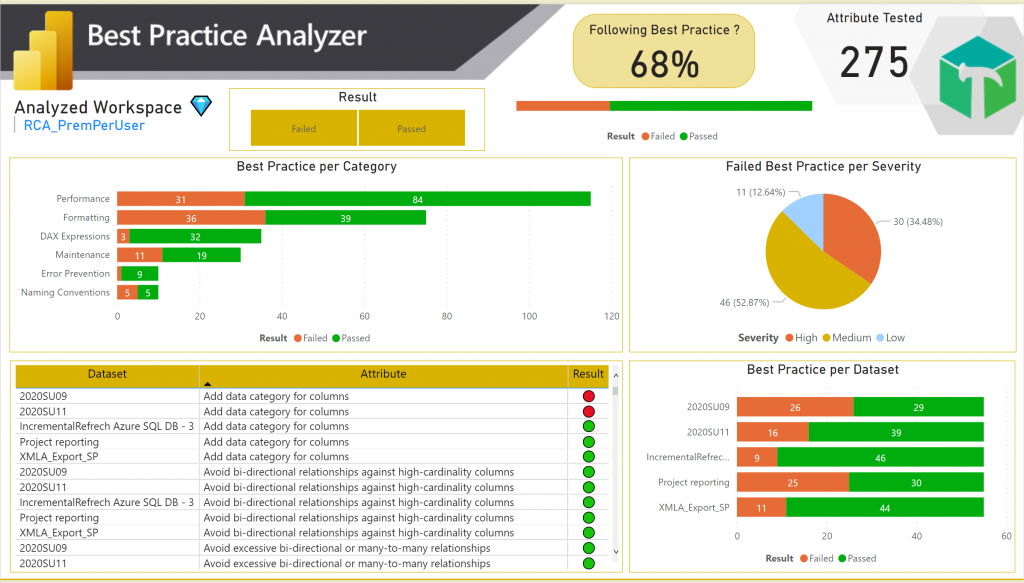

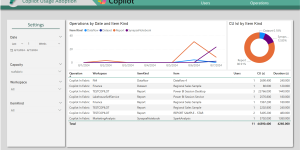

While it is very convenient to view BPA result from Tabular Editor because it can also help in resolving the findings, it is not possible to have an aggregated view of multiple datasets analysis. This is why I have quickly created the following Power BI report:

- Aggregated result of the BPA over the Premium workspace:

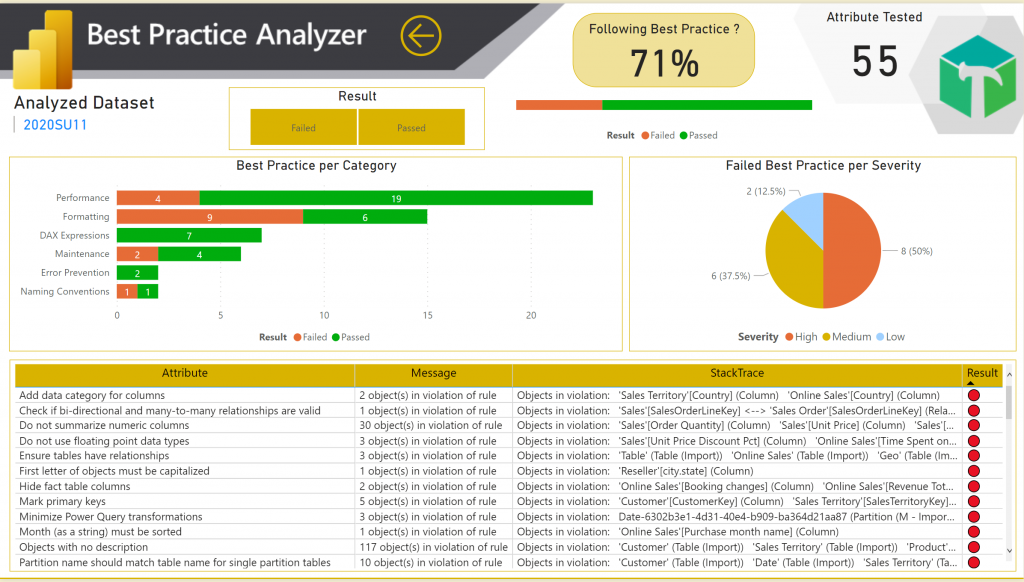

- With a Drill Through to a detail page on a specific dataset:

Source: PBI_BPA_Workspace.pbit

3 – Conclusion

Improving utilization of dedicated capacity by decreasing data model size or decreasing refresh duration has a direct positive effect on the availability of the paid resources of the capacity.

To keep high data model quality, the BPA could be scheduled, and a continuous improvement of the data model can be planned:

💡 Assessing datasets once they are in Power BI service is good but why not accessing data model before publishing them! Here is the Tabular Editor documentation to implement it: Command Line — Tabular Editor documentation.

Power BI Premium recently released a new version of Premium, called Premium Gen2, which is currently in preview. Premium Gen2 will simplify the management of Premium capacities and reduce management overhead but all those learning on optimizing data model will still be valuable.

Resources:

Pingback: Contrôler la qualité des modèles sémantiques Power BI au fil du temps - Pulsweb - Romain Casteres